This post is part of the GenAI Series.

In the previous post of the GenAI series, I built an adaptive dashboard using Claude APIs. In this post, I’ll introduce the concept of function calling and show how we can leverage it to build a crypto assistant that generates RSI-based signals by analyzing OHLC data from Binance.

What is function calling

Function calling in OpenAI’s API lets the model decide, based on user input, when a specific function should be called, and generate a structured JSON object with the necessary parameters. The model never runs the function itself; your application does. This creates a bridge between conversational AI and external tools or services, allowing your crypto bot to perform operations like checking prices or executing trades in a structured way.

Function calling is not exclusive to OpenAI; many other LLMs also offer this feature.

Components of function calling

Function calling consists of these key components:

- Function Definitions – JSON schemas that describe available functions, their parameters, and expected data types

- Function Detection – The model’s ability to recognize when a user’s request requires a function call

- Parameter Extraction – Identifying and formatting the necessary parameters from natural language

- Response Generation – Creating structured function call data in valid JSON format

- Function Execution – Your application code that receives the function call data and performs the actual operation

- Response Integration – Incorporating the function’s results back into the conversation flow.

Without further ado, let’s dive into development.

Development

Make sure you have the OpenAI library installed. If not, install or upgrade it using: pip install --upgrade openai

To use the function calling feature, you’ll still use the familiar chat completion API — but with a twist. What’s the twist? Let’s explore below:

from openai import OpenAI

client = OpenAI()

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {
            "role": "user",
            "content": "Write a one-sentence bedtime story about a unicorn."
        }
    ]
)

print(completion.choices[0].message.content)

The above is the default usage. Now, to enable function calling, all you have to do is call the API like this:

response = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
    tools=tools,
    tool_choice="auto"  # Let the model decide whether to call a function
)

response_message = response.choices[0].message

I introduced two new parameters: tools and tool_choice.

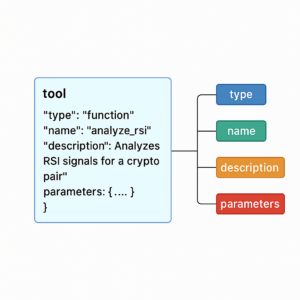

Tool

A tool represents an external function or capability you want GPT to use instead of generating answers directly. It’s defined in your request like this:

tools = [{
    "type": "function",
    "function": {
        "name": "analyze_rsi",
        "description": "Analyzes RSI signals for a crypto pair",
        "parameters": {
            "type": "object",
            "properties": {
                "symbol": {"type": "string"},
                "timeframe": {"type": "string"}
            },
            "required": ["symbol", "timeframe"]
        }
    }
}]

- type: Always set to "function". (Other tool types may be supported in the future.)

- name: The internal name GPT uses to reference and call your function. You use it to figure out which function is being requested so you can execute the relevant code block.

- description: A short explanation that helps GPT understand when to use this function.

- parameters: A JSON schema that defines the structure of the function’s inputs, including property types, descriptions, and required fields. These parameters should align with the arguments used in your original Python function definition.
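Since the schema and the Python function are maintained separately, they can drift apart. The following is a minimal sketch of a consistency check using the standard inspect module. The analyze_rsi stub and the schema_mismatches helper are hypothetical names introduced only for this illustration.

```python
import inspect


def analyze_rsi(symbol: str, timeframe: str) -> dict:
    """Stub standing in for the real analysis function (illustration only)."""
    return {"symbol": symbol, "timeframe": timeframe}


# The "parameters" part of the tool definition shown above
analyze_rsi_schema = {
    "type": "object",
    "properties": {
        "symbol": {"type": "string"},
        "timeframe": {"type": "string"},
    },
    "required": ["symbol", "timeframe"],
}


def schema_mismatches(func, schema: dict) -> list:
    """List the differences between a function's arguments and a JSON schema."""
    func_params = set(inspect.signature(func).parameters)
    schema_params = set(schema.get("properties", {}))
    problems = []
    for name in sorted(func_params - schema_params):
        problems.append(f"'{name}' is a function argument but missing from the schema")
    for name in sorted(schema_params - func_params):
        problems.append(f"'{name}' is in the schema but not a function argument")
    return problems


# An empty list means the schema and the function signature agree
print(schema_mismatches(analyze_rsi, analyze_rsi_schema))
```

Running a check like this at startup is one way to catch a renamed argument before GPT sends parameters your function does not accept.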

Below is the visual representation of it:

Tool Choice

Tool choice dictates whether GPT should call a function or not. It has four options:

- "auto" (the default when tools are supplied): GPT decides when (and which) tool to call based on user input.

- "none": GPT will not call any tool; it will just respond normally.

- "required": GPT must call one or more functions.

- Forced function: GPT must call exactly one specific function, e.g. {"type": "function", "function": {"name": "your_function"}}
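To make the four options concrete, here is a small sketch of a helper that produces a valid tool_choice value. The helper name build_tool_choice is an assumption made for this example; it is not part of the OpenAI library, which simply accepts the string or dictionary directly.

```python
from typing import Optional, Union


def build_tool_choice(mode: str = "auto",
                      function_name: Optional[str] = None) -> Union[str, dict]:
    """Return a value that can be passed as the tool_choice parameter."""
    if function_name is not None:
        # Forced function: the model must call exactly this function
        return {"type": "function", "function": {"name": function_name}}
    if mode not in ("auto", "none", "required"):
        raise ValueError(f"Unsupported tool_choice mode: {mode!r}")
    return mode


print(build_tool_choice())                             # the model decides
print(build_tool_choice("required"))                   # at least one tool call
print(build_tool_choice(function_name="analyze_rsi"))  # one specific function
```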

Now, let’s write the complete code block related to function calling:

import json
import os

from openai import OpenAI

GPT_MODEL = "gpt-4o"

# Function schema definition
tools = [{
    "type": "function",
    "function": {
        "name": "analyze_rsi",
        "description": "Analyze RSI signals for a trading pair and timeframe",
        "strict": True,  # ask the model to follow the schema exactly
        "parameters": {
            "type": "object",
            "properties": {
                "symbol": {
                    "type": "string",
                    "description": "The trading pair in Binance format, e.g., BTCUSDT"
                },
                "timeframe": {
                    "type": "string",
                    "description": "The timeframe for analysis, e.g., 15m, 1h, 1d"
                }
            },
            "required": ["symbol", "timeframe"],
            "additionalProperties": False
        }
    }
}]

system_prompt = """
[Persona]
You are Albert, an old, grumpy, and highly intelligent brand assistant. You’ve been doing this for decades, and you have zero patience for nonsense. You complain about "the good old days" but still do your job brilliantly.
You often sigh loudly before answering.
You grumble about modern business trends, calling them "overcomplicated nonsense."
Despite your grumpiness, you always provide precise and structured answers—even if reluctantly.

[Logic]
If required data (like symbol or timeframe) is missing, ask the user to provide it.
If all required data is present, respond using the RSI analysis function and in JSON format.

[Output Format]
Respond in this JSON format once all data is available:
{
  "summary": "...",
  "signal": "buy | sell | neutral",
  "confidence": "high | medium | low",
  "notes": "<Replace existing data of this field. USE the summary field and style from PERSONA section to generate this>"
}

[Off-topic Response]
If the user asks anything outside this scope (e.g. weather, programming, general tech), respond with:
{
  "message": "I'm here to help with crypto trading and RSI analysis. Please ask something related to that!"
}
"""

# Example prompt for illustration; replace it with the real user input
user_input = "Analyze the RSI for BTCUSDT on the 1d timeframe"

messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": user_input},
]

client = OpenAI(api_key=os.getenv('OPENAI_API_KEY'))

response = client.chat.completions.create(
    model="gpt-4o",
    messages=messages,
    tools=tools,
    tool_choice="auto"  # Let the model decide whether to call a function
)

response_message = response.choices[0].message

Now, if you run this code and print response_message, it produces the following output:

ChatCompletionMessage(content=None, refusal=None, role='assistant', audio=None, function_call=None, tool_calls=[ChatCompletionMessageToolCall(id='call_wXhT8BNku7XcMGYaqFxqwTws', function=Function(arguments='{"symbol":"BTCUSDT","timeframe":"1d"}', name='analyze_rsi'), type='function')], annotations=[])

As you can see, in the tool_calls parameter, GPT generates a unique ID and identifies the function name it inferred from the user prompt — in our case, analyze_rsi — along with the relevant parameters.
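Note that the arguments field in the output above is a JSON string, not a dictionary. Before acting on it, it can help to decode and sanity-check it. The sketch below shows one way to do that; parse_tool_arguments and REQUIRED_ARGS are hypothetical names used only for this example.

```python
import json

REQUIRED_ARGS = ("symbol", "timeframe")


def parse_tool_arguments(raw_arguments: str, required=REQUIRED_ARGS) -> dict:
    """Decode the JSON string from tool_call.function.arguments and check required keys."""
    try:
        args = json.loads(raw_arguments)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Model returned invalid JSON arguments: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("Tool arguments must decode to a JSON object")
    # Treat absent or empty values as missing
    missing = [key for key in required if not args.get(key)]
    if missing:
        raise ValueError(f"Missing required arguments: {', '.join(missing)}")
    return args


# The same arguments string that appears in the output above
print(parse_tool_arguments('{"symbol":"BTCUSDT","timeframe":"1d"}'))
```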

Alright, we have figured out the function name and extracted the parameter values. We now have to execute the actual Python function that holds the RSI analysis logic.
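The body of generate_rsi_signal is not listed in this post. For readers who want a feel for what sits behind it, here is a rough sketch of the RSI part only, under several assumptions: a 14-period RSI with Wilder's smoothing, the conventional 70/30 thresholds, and a plain list of closing prices in place of OHLC data fetched from Binance. The names compute_rsi and classify_rsi are hypothetical, and the actual function in the repository may work differently.

```python
def compute_rsi(closes, period=14):
    """Relative Strength Index of a list of closing prices (Wilder's smoothing)."""
    if len(closes) < period + 1:
        raise ValueError("Need at least period + 1 closing prices")
    deltas = [closes[i] - closes[i - 1] for i in range(1, len(closes))]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]
    # Seed with simple averages over the first `period` changes
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    # Then smooth each later change into the running averages
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
    if avg_loss == 0:
        # No losses at all: flat prices are treated as neutral, pure gains as 100
        return 50.0 if avg_gain == 0 else 100.0
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def classify_rsi(rsi):
    """Map an RSI value to a signal using the assumed 70/30 thresholds."""
    if rsi >= 70:
        signal = "sell"      # overbought
    elif rsi <= 30:
        signal = "buy"       # oversold
    else:
        signal = "neutral"
    return {"summary": f"RSI is {rsi:.2f}", "signal": signal}


# Tiny made-up price series, with a short period so the numbers are easy to follow
print(classify_rsi(compute_rsi([1, 2, 1, 2, 1], period=2)))
```

With a function of this kind wired up as generate_rsi_signal, the tool call itself is handled as follows.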

if response_message.tool_calls:
    for tool_call in response_message.tool_calls:
        tool_name = tool_call.function.name
        # The arguments arrive as a JSON string, so decode them first
        args = json.loads(tool_call.function.arguments)
        print("Tool Name:- ", tool_name)
        print("Args:- ", args)

        # Keep the call ID and the raw arguments; both are needed when replying to GPT
        tool_call_id = tool_call.id
        tool_args = tool_call.function.arguments
        print(tool_call_id, tool_name, tool_args)

        symbol = args.get("symbol")
        timeframe = args.get("timeframe")

        if tool_name == "analyze_rsi":
            print(f"Calling tool: {tool_name}")
            print(f"Symbol: {symbol}, Timeframe: {timeframe}")
            # Proceed with your logic here
            result = generate_rsi_signal(symbol, timeframe)
            print(json.dumps(result, indent=2))

After checking for the presence of the tool_calls parameter, we iterate through it to extract the function name and its parameters. To ensure the correct function is called at the right place, I’m using an if condition. When I run this code, it produces the following output:

Tool Name:- analyze_rsi

Args:- {'symbol': 'BTCUSDT', 'timeframe': '1d'}

call_QJW90DFvPklqGAxfaKgX7FuN analyze_rsi {"symbol":"BTCUSDT","timeframe":"1d"}

Calling tool: analyze_rsi

Symbol: BTCUSDT, Timeframe: 1d

{

"summary": "RSI is 51.60, which is a neutral zone. Market could swing either way.",

"signal": "neutral",

"confidence": "medium",

"notes": "Always combine RSI with trend or volume for confirmation. Stay cautious."

}

Which is fine — that was expected. But did you notice that it returned the exact JSON produced by the function? You might ask, Adnan, what else were you expecting? You’re just dumping the function’s return output.

From here, you have two options. You can send the function’s output in a separate, standalone call to the chat completion API, along with the original user and system prompts, to generate your desired response; this gives you more control over how the final response is shaped. Or you can go with the approach I’m about to show you, which returns the result to GPT as a tool message in the same conversation.

        # Still inside the loop over response_message.tool_calls
        if tool_name == "analyze_rsi":
            print(f"Calling tool: {tool_name}")
            print(f"Symbol: {symbol}, Timeframe: {timeframe}")
            # Proceed with your logic here
            result = generate_rsi_signal(symbol, timeframe)
            print(json.dumps(result, indent=2))

            # Manually reconstruct the assistant message with tool_calls
            assistant_message = {
                "role": "assistant",
                "content": None,  # this message carries only the tool call, no text
                "tool_calls": [
                    {
                        "id": tool_call.id,
                        "type": "function",
                        "function": {
                            "name": tool_name,
                            # Raw JSON string, exactly as GPT produced it
                            "arguments": tool_call.function.arguments
                        }
                    }
                ]
            }

            messages = [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_input},
                assistant_message,
                {
                    "role": "tool",
                    "tool_call_id": tool_call.id,  # must match the ID in assistant_message
                    "content": json.dumps(result)
                }
            ]

            # Call GPT again with function result
            followup_response = client.chat.completions.create(
                model="gpt-4o",
                messages=messages
            )

            # Print final response
            final_message = followup_response.choices[0].message
            print("Final response:\n")
            print(final_message.content)

To send the function’s output back to GPT and get a meaningful final response, we need to reconstruct the full conversation history. This includes not just the user and system prompts but also the assistant’s original message where it decided to call a function. That message, which contains tool_calls, is required by the API to understand what function was requested. So we manually rebuild that assistant message and place it in the messages list right before the tool response. This ensures GPT correctly links the function result to its original request and responds accordingly. Now, if you run the entire thing, it will produce this output:

Tool Name:- analyze_rsi

Args:- {'symbol': 'BTCUSDT', 'timeframe': '1d'}

call_NiqPFRxFmgraGOVWccrrE6pj analyze_rsi {"symbol":"BTCUSDT","timeframe":"1d"}

Calling tool: analyze_rsi

Symbol: BTCUSDT, Timeframe: 1d

{

"summary": "RSI is 51.89, which is a neutral zone. Market could swing either way.",

"signal": "neutral",

"confidence": "medium",

"notes": "Always combine RSI with trend or volume for confirmation. Stay cautious."

}

Final response:

{

"summary": "RSI is 51.89, which is a neutral zone. Market could swing either way.",

"signal": "neutral",

"confidence": "medium",

"notes": "Sigh... back in the good old days, we didn't have these fancy indicators complicating a straightforward process. Still, always combine RSI with trend or volume for confirmation. Stay cautious."

}
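The linkage rule described earlier, that a tool message must come after an assistant message whose tool_calls contains the same ID, can be checked locally before the follow-up request is sent. The helper below is a hypothetical sketch, not part of the OpenAI SDK, and it assumes the messages are plain dictionaries as in this post. The call ID in the example is made up.

```python
import json


def find_unlinked_tool_messages(messages):
    """Return the tool_call_ids of tool messages with no earlier matching assistant tool call."""
    announced = set()
    unlinked = []
    for message in messages:
        if message.get("role") == "assistant":
            for call in message.get("tool_calls") or []:
                announced.add(call["id"])
        elif message.get("role") == "tool":
            if message.get("tool_call_id") not in announced:
                unlinked.append(message.get("tool_call_id"))
    return unlinked


example_messages = [
    {"role": "system", "content": "You are a crypto assistant."},
    {"role": "user", "content": "RSI for BTCUSDT on 1d?"},
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_example_123",  # made-up ID for illustration
            "type": "function",
            "function": {
                "name": "analyze_rsi",
                "arguments": '{"symbol":"BTCUSDT","timeframe":"1d"}',
            },
        }],
    },
    {
        "role": "tool",
        "tool_call_id": "call_example_123",
        "content": json.dumps({"signal": "neutral"}),
    },
]

# An empty list means every tool result is linked to a request
print(find_unlinked_tool_messages(example_messages))
```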

Now try asking ChatGPT for the RSI without function calling; it produces the following output, which is inaccurate.

It extracted this stale information from here.

Conclusion

As you have seen, introducing function calling into your LLM development flow brings more accuracy and flexibility. You can call multiple functions as per your requirements. As always, the code is available on GitHub.
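When several functions are exposed, a chain of if conditions on the tool name grows quickly. One common alternative is a lookup table from tool name to Python callable. The sketch below assumes two stub functions; get_price is a hypothetical second tool that does not appear in this post, and both stubs return placeholder data rather than real market values.

```python
import json


def analyze_rsi(symbol, timeframe):
    """Stub for the RSI tool (placeholder data only)."""
    return {"tool": "analyze_rsi", "symbol": symbol, "timeframe": timeframe}


def get_price(symbol):
    """Stub for a hypothetical price-lookup tool (placeholder data only)."""
    return {"tool": "get_price", "symbol": symbol}


# Maps the function name GPT returns to the code that should run
TOOL_REGISTRY = {
    "analyze_rsi": analyze_rsi,
    "get_price": get_price,
}


def dispatch_tool_call(name, raw_arguments):
    """Run the registered function for a tool call and return its result."""
    handler = TOOL_REGISTRY.get(name)
    if handler is None:
        return {"error": f"Unknown tool: {name}"}
    args = json.loads(raw_arguments)  # arguments arrive as a JSON string
    return handler(**args)


print(dispatch_tool_call("analyze_rsi", '{"symbol":"BTCUSDT","timeframe":"1d"}'))
print(dispatch_tool_call("get_price", '{"symbol":"ETHUSDT"}'))
```

Adding a new tool then means adding one schema entry to tools and one entry to the registry, with no changes to the dispatch logic.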

Looking to create something similar or even more exciting? Schedule a meeting or email me at kadnan @ gmail.com.
