In this post I am going to discuss how you can use IPFS, a decentralized storage network, in your Python apps to store different kinds of data.

What is IPFS?

From Wikipedia:

InterPlanetary File System (IPFS) is a protocol and network designed to create a content-addressable, peer-to-peer method of storing and sharing hypermedia in a distributed file system. IPFS was initially designed by Juan Benet, and is now an open-source project developed with help from the community.

Put simply, it is like a decentralized Amazon S3. Your data is served by nodes across a peer-to-peer network rather than by a single server, which makes the system scalable and also more reliable: as long as at least one node still holds the content, it stays reachable at the same address, so 404 File Not Found errors become far less likely.
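To make "content-addressable" concrete, here is a minimal sketch of a store where the address of a piece of data is derived from the data itself. It is only an illustration: the `ContentStore` class is hypothetical, and real IPFS hashes (the `Qm...` strings you will see later) are built differently, so do not expect the addresses to match.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is derived from the data itself."""

    def __init__(self):
        self._blocks = {}

    def add(self, data: bytes) -> str:
        # The address is the SHA-256 digest of the content, so identical
        # content always maps to the same address.
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data
        return address

    def cat(self, address: str) -> bytes:
        data = self._blocks[address]
        # Anyone holding the address can check the data was not altered.
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("content does not match its address")
        return data


store = ContentStore()
first = store.add(b"hello ipfs")
second = store.add(b"hello ipfs")
print(first == second)  # True: same content, same address
print(store.cat(first))
```

Because the address depends only on the content, it does not matter which node hands you the data: you can always verify that you received what you asked for.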

There are many ways you can use it: primarily for file storage, but also for web hosting or as the basis of a messaging system. The possibilities are endless.

Installation

Download the setup file from here. Since I am on OSX, I just unpacked go-ipfs_v0.4.13_darwin-amd64.tar.gz and executed install.sh, which moves the binary to /usr/local/bin.

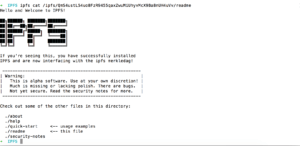

To make sure everything is set up properly, run ipfs --version or ipfs on your console. If all is well, you will see something like this:

    ➜ IPFS ipfs --version
    ipfs version 0.4.13

OK, it’s installed. Now it’s time to initialize your local IPFS node. To do that, run the following command:

    ipfs init

    ➜ IPFS ipfs init
    initializing IPFS node at /Users/AdnanAhmad/.ipfs
    generating 2048-bit RSA keypair...done
    peer identity: QmaJcDSAceC6nDZ4CEz8F6mkiGcATjWbCE8JAfbiVxY7oW
    to get started, enter:

        ipfs cat /ipfs/QmS4ustL54uo8FzR9455qaxZwuMiUhyvMcX9Ba8nUH4uVv/readme

When you run ipfs cat /ipfs/QmS4ustL54uo8FzR9455qaxZwuMiUhyvMcX9Ba8nUH4uVv/readme on the command line, it should show you the contents of that readme file.

So, basically, ipfs cat shows the content of a file. If you run ipfs get instead, it downloads the file to your machine.

IPFS Daemon

When you run the command ipfs daemon, it starts a node on your machine that connects to other available nodes. The concept is similar to a blockchain network: there are many nodes, and you start your own node, which joins them. If you want, you can set up a private network of nodes by setting their IPs in the Swarm and Bootstrap sections of the config. For instance, these are the defaults:

    "Addresses": {
      "API": "/ip4/127.0.0.1/tcp/5001",
      "Announce": [],
      "Gateway": "/ip4/127.0.0.1/tcp/8080",
      "NoAnnounce": [],
      "Swarm": [
        "/ip4/0.0.0.0/tcp/4001",
        "/ip6/::/tcp/4001"
      ]
    },
    "Bootstrap": [
      "/dnsaddr/bootstrap.libp2p.io/ipfs/QmNnooDu7bfjPFoTZYxMNLWUQJyrVwtbZg5gBMjTezGAJN",
      "/dnsaddr/bootstrap.libp2p.io/ipfs/QmQCU2EcMqAqQPR2i9bChDtGNJchTbq5TbXJJ16u19uLTa",
      "/dnsaddr/bootstrap.libp2p.io/ipfs/QmbLHAnMoJPWSCR5Zhtx6BHJX9KiKNN6tpvbUcqanj75Nb",
      "/dnsaddr/bootstrap.libp2p.io/ipfs/QmcZf59bWwK5XFi76CZX8cbJ4BhTzzA3gU1ZjYZcYW3dwt",
      "/ip4/104.131.131.82/tcp/4001/ipfs/QmaCpDMGvV2BGHeYERUEnRQAwe3N8SzbUtfsmvsqQLuvuJ",
      "/ip4/104.236.179.241/tcp/4001/ipfs/QmSoLPppuBtQSGwKDZT2M73ULpjvfd3aZ6ha4oFGL1KrGM",
      "/ip4/128.199.219.111/tcp/4001/ipfs/QmSoLSafTMBsPKadTEgaXctDQVcqN88CNLHXMkTNwMKPnu",
      "/ip4/104.236.76.40/tcp/4001/ipfs/QmSoLV4Bbm51jM9C4gDYZQ9Cy3U6aXMJDAbzgu2fzaDs64",
      "/ip4/178.62.158.247/tcp/4001/ipfs/QmSoLer265NRgSp2LA3dPaeykiS1J6DifTC88f5uVQKNAd",
      "/ip6/2604:a880:1:20::203:d001/tcp/4001/ipfs/QmSoLPppuBtQSGwKDZT2M73ULpjvfd3aZ6ha4oFGL1KrGM",
      "/ip6/2400:6180:0:d0::151:6001/tcp/4001/ipfs/QmSoLSafTMBsPKadTEgaXctDQVcqN88CNLHXMkTNwMKPnu",
      "/ip6/2604:a880:800:10::4a:5001/tcp/4001/ipfs/QmSoLV4Bbm51jM9C4gDYZQ9Cy3U6aXMJDAbzgu2fzaDs64",
      "/ip6/2a03:b0c0:0:1010::23:1001/tcp/4001/ipfs/QmSoLer265NRgSp2LA3dPaeykiS1J6DifTC88f5uVQKNAd"
    ],

You can view the full config by running ipfs config show.
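The addresses in the config use a path-like notation (`/ip4/127.0.0.1/tcp/5001`) rather than the usual host:port form. If you want to pull the host and port out of such a string in Python, something like the sketch below should do. Note that `parse_multiaddr` is a hypothetical helper of my own, not part of any IPFS library, and it only handles addresses where every protocol name is followed by exactly one value.

```python
def parse_multiaddr(addr: str) -> dict:
    """Split a simple address such as /ip4/127.0.0.1/tcp/5001 into pairs.

    Simplification: assumes every protocol is followed by exactly one value.
    That holds for the config entries shown in this post, but not for every
    address (for example /p2p-circuit carries no value).
    """
    parts = addr.strip("/").split("/")
    if len(parts) % 2 != 0:
        raise ValueError("unsupported address: " + addr)
    return dict(zip(parts[0::2], parts[1::2]))


api_addr = parse_multiaddr("/ip4/127.0.0.1/tcp/5001")
host, port = api_addr["ip4"], int(api_addr["tcp"])
print(host, port)
```

The resulting host and port are the same values you pass to the Python client later in this post.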

When you run ipfs daemon, it shows the following output:

    Initializing daemon...
    Adjusting current ulimit to 2048...
    Successfully raised file descriptor limit to 2048.
    Swarm listening on /ip4/127.0.0.1/tcp/4001
    Swarm listening on /ip4/192.168.1.3/tcp/4001
    Swarm listening on /ip6/::1/tcp/4001
    Swarm listening on /p2p-circuit/ipfs/QmaJcDSAceC6nDZ4CEz8F6mkiGcATjWbCE8JAfbiVxY7oW
    Swarm announcing /ip4/127.0.0.1/tcp/4001
    Swarm announcing /ip4/192.168.1.3/tcp/4001
    Swarm announcing /ip4/39.57.97.236/tcp/49388
    Swarm announcing /ip6/::1/tcp/4001
    API server listening on /ip4/127.0.0.1/tcp/5001
    Gateway (readonly) server listening on /ip4/127.0.0.1/tcp/8080
    Daemon is ready

You can view content through a web interface by visiting http://localhost:8080/ipfs/ followed by the hash of the file object. For instance, ipfs refs local prints the hashes of the content that exists on your local node:

    ➜ IPFS ipfs refs local
    QmdL9t1YP99v4a2wyXFYAQJtbD9zKnPrugFLQWXBXb82sn
    QmZTR5bcpQD7cFgTorqxZDYaew1Wqgfbd2ud9QqGPAkK2V
    QmXgqKTbzdh83pQtKFb19SpMCpDDcKR2ujqk3pKph9aCNF
    QmS4ustL54uo8FzR9455qaxZwuMiUhyvMcX9Ba8nUH4uVv
    Qma4NNR8dUSDt2BvLYYtgdMLF8J3usKrT9kDFhHzfpB7oq
    QmNcNo8TXi92Da91fDfzCMbYF5ScaHEJmQG1jqCEbkS7Kt
    QmYCvbfNbCwFR45HiNP45rwJgvatpiW38D961L5qAhUM5Y
    QmejvEPop4D7YUadeGqYWmZxHhLc4JBUCzJJHWMzdcMe2y
    QmPhk6cJkRcFfZCdYam4c9MKYjFG9V29LswUnbrFNhtk2S
    QmY5heUM5qgRubMDD1og9fhCPA6QdkMp3QCwd4s7gJsyE7
    QmSKboVigcD3AY4kLsob117KJcMHvMUu6vNFqk1PQzYUpp
    QmQ5vhrL7uv6tuoN9KeVBwd4PwfQkXdVVmDLUZuTNxqgvm
    QmZZRTyhDpL5Jgift1cHbAhexeE1m2Hw8x8g7rTcPahDvo
    Qme7RW9zfGgYujJt6CJ5yKiMkvV9zPSSkbB4hgipee3j6S
    QmYQoke9bEqzBLWPGqyjhUYc3TwBEkn4wed2kUmAbxvLFu
    QmdfTbBqBPQ7VNxZEYEj14VmRuZBkqFbiwReogJgS1zR1n

You can view any of these in your browser, for example http://localhost:8080/ipfs/QmY5heUM5qgRubMDD1og9fhCPA6QdkMp3QCwd4s7gJsyE7 and the content is displayed. In my case it is the help file that ships with IPFS itself.
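Since the gateway is plain HTTP, you can also build these URLs and fetch content from Python without any IPFS library. The sketch below uses only the standard library; `gateway_url` and `fetch` are names I made up for illustration, and `fetch` only works while the daemon is running with the default gateway address shown above.

```python
from urllib.request import urlopen

# Default gateway address printed by `ipfs daemon` in this post.
GATEWAY = "http://localhost:8080"


def gateway_url(content_hash: str, gateway: str = GATEWAY) -> str:
    """Build the gateway URL for a piece of content."""
    return "{}/ipfs/{}".format(gateway.rstrip("/"), content_hash)


def fetch(content_hash: str, timeout: float = 10.0) -> bytes:
    """Download content through the local gateway (daemon must be running)."""
    with urlopen(gateway_url(content_hash), timeout=timeout) as response:
        return response.read()


url = gateway_url("QmY5heUM5qgRubMDD1og9fhCPA6QdkMp3QCwd4s7gJsyE7")
print(url)
```

Calling `fetch()` with one of the hashes from `ipfs refs local` should return the raw bytes of that object, provided the gateway is up.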

Pinning

When you add a file to IPFS, other nodes that fetch it keep a cached copy, so it can end up on several nodes. IPFS also has a garbage collection mechanism that eventually deletes cached content that is not pinned. And if the only node holding your data goes offline, the data becomes unavailable. To make sure your data stays on a node, you pin it by running the command:

ipfs pin add <hash_of_content>, where the hash of the content is one of the entries you see above. Once pinned, the content is not removed by the garbage collection process. You can also unpin it by running ipfs pin rm <hash_of_content>.
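If the relationship between pinning and garbage collection feels abstract, the toy model below may help. It is a conceptual sketch only: `ToyNode` is a hypothetical class that mimics the idea in memory and says nothing about how IPFS implements its block store internally.

```python
import hashlib


class ToyNode:
    """Conceptual model of a node's local store with pinning and GC."""

    def __init__(self):
        self.blocks = {}   # hash -> data held by this node
        self.pins = set()  # hashes protected from garbage collection

    def add(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()
        self.blocks[key] = data
        return key

    def pin_add(self, key: str) -> None:
        if key not in self.blocks:
            raise KeyError(key)
        self.pins.add(key)

    def pin_rm(self, key: str) -> None:
        self.pins.discard(key)

    def gc(self) -> list:
        """Delete every unpinned block and return the removed hashes."""
        removed = [key for key in self.blocks if key not in self.pins]
        for key in removed:
            del self.blocks[key]
        return removed


node = ToyNode()
keep = node.add(b"important file")
temp = node.add(b"cached file")
node.pin_add(keep)
removed = node.gc()
print(keep in node.blocks, temp in node.blocks)  # True False
```

The pinned block survives the collection, while the unpinned one is gone. Unpinning `keep` and running `gc()` again would remove it as well.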

So far we have seen how to interact with IPFS via the command line. But how do you automate it, or use it from your Python code? Let’s get into the code!

Development

I am not doing anything fancy, just calling the same commands we ran via the CLI. Once you know how, you can come up with your own image hosting service, messaging app, or your own decentralized Amazon S3!

First, install the IPFS Python API by running the command pip install ipfsapi.

Start the local daemon by running ipfs daemon, then create a new Python file in your favorite IDE.

    import ipfsapi

    if __name__ == '__main__':
        # Connect to the API port of the local node
        try:
            api = ipfsapi.connect('127.0.0.1', 5001)
            print(api)
        except ipfsapi.exceptions.ConnectionError as ce:
            # Raised when no daemon is listening on that address
            print(str(ce))

If the connection succeeds, you will see something like ipfsapi.client.Client object at 0x104af5a90; otherwise you get an exception like: ConnectionError: HTTPConnectionPool(host='127.0.0.1', port=5001): Max retries exceeded with url: /api/v0/version?stream-channels=true (Caused by NewConnectionError(': Failed to establish a new connection: [Errno 61] Connection refused',))
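A "Connection refused" error almost always means the daemon is not running. If you would rather check that up front, a plain TCP probe of the API port is enough. This is a small sketch using only the standard library; `api_is_reachable` is a hypothetical helper, and an open port only tells you that something is listening, not that it is IPFS.

```python
import socket


def api_is_reachable(host: str = "127.0.0.1", port: int = 5001,
                     timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers "connection refused" and timeouts alike.
        return False


if api_is_reachable():
    print("API port is open")
else:
    print("Nothing is listening on port 5001; is `ipfs daemon` running?")
```

You could call this before `ipfsapi.connect()` and print a friendlier message than the raw exception.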

OK, it’s connected. Now let’s add a new file:

new_file = api.add('new.txt')

This adds the file new.txt to IPFS. If you print() the result, you will see the returned dict, like below:

{'Name': 'new.txt', 'Hash': 'QmWvgsuZkaWxN1iC7GDciEGsAqphmDyCsk3CVHh7XVUUHq', 'Size': '28'}
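The hash is the only handle you have on the file afterwards, so it is worth storing it somewhere. Here is a minimal sketch of working with the returned dict. The `catalog` dict is a hypothetical stand-in for whatever storage your app uses, and the values are copied from the output above so the snippet runs without a daemon.

```python
# The dict below is the return value printed in this post; in a real script
# it would come from api.add('new.txt').
new_file = {'Name': 'new.txt',
            'Hash': 'QmWvgsuZkaWxN1iC7GDciEGsAqphmDyCsk3CVHh7XVUUHq',
            'Size': '28'}

# Hypothetical catalog mapping file names to their IPFS hashes.
catalog = {}
catalog[new_file['Name']] = new_file['Hash']

# 'Size' arrives as a string, so convert it before doing arithmetic.
reported_size = int(new_file['Size'])

print(catalog['new.txt'], reported_size)
```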

Now let’s access this file via browser by visiting http://localhost:8080/ipfs/QmWvgsuZkaWxN1iC7GDciEGsAqphmDyCsk3CVHh7XVUUHq/

and it will show the newly added file.

To read the content of the file, call api.cat('QmWvgsuZkaWxN1iC7GDciEGsAqphmDyCsk3CVHh7XVUUHq'), which returns the content of the file, the same thing you get via the command line. You can learn more about IPFS and Python integration here.

Conclusion

IPFS is a new way to implement the web, a new protocol that makes things scalable and reliable. You can think of it as a decentralized, peer-to-peer storage system. As a Python developer, you can integrate it into new or existing Python apps using these simple-to-use APIs. As always, the code is available on Github.