In this post I am going to write a very simple web scraper in Python with the help of the requests and BeautifulSoup libraries. Before going further, let me explain what web (or HTML) scraping is.

What is Web scraping?

According to Wikipedia:

Web scraping (web harvesting or web data extraction) is a computer software technique of extracting information from websites. This is accomplished by either directly implementing the Hypertext Transfer Protocol (on which the Web is based), or embedding a web browser.

So you use scraping techniques to access the data on web pages and make it useful for various purposes (e.g. analysis, aggregation). Scraping is not the only way to extract data from a website or web application; other options include an API or SDK provided by the application, or RSS/Atom feeds. If such facilities are available, scraping should be the last resort, especially when there are questions about the legality of the activity.

I am going to write the scraper in Python. Python is not the only language suited to the purpose; almost every language provides some way to fetch a web page and parse HTML. I use Python as a personal choice, because of the simplicity of the language and the libraries available.

Now I have to pick a web page to fetch the required information from. I picked OLX; the page I am going to scrape is the detail page of a single ad, a car ad here.

Suppose I am going to build a price comparison system for the used cars available on OLX. For that I need the data to be available periodically in my local database. To achieve this I would have to visit each car ad's page, parse the data, and store it in the local DB. For simplicity, I am not covering crawling the listing page and dumping the data of each item individually.

The page has some useful information for my price comparison system: title, price, location, images, owner name, and description.

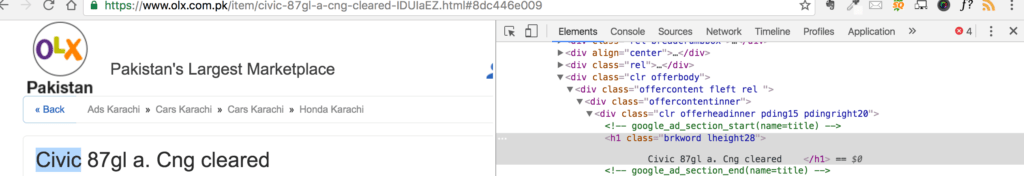
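The fields listed above map fairly naturally onto a single table in the local database. Below is a minimal sketch of what that could look like, assuming SQLite; the table name `car_ads`, the column names, and the `save_ad` helper are all hypothetical and not part of the original code.

```python
import json
import sqlite3

# Hypothetical schema: table and column names are assumptions for illustration.
SCHEMA = """
CREATE TABLE IF NOT EXISTS car_ads (
    url         TEXT PRIMARY KEY,
    title       TEXT,
    price       TEXT,
    location    TEXT,
    owner       TEXT,
    description TEXT,
    images      TEXT,  -- JSON-encoded list of image URLs
    scraped_at  TEXT DEFAULT CURRENT_TIMESTAMP
)
"""


def save_ad(conn, url, ad):
    """Insert one ad, replacing any earlier row stored for the same URL."""
    conn.execute(
        "INSERT OR REPLACE INTO car_ads "
        "(url, title, price, location, owner, description, images) "
        "VALUES (?, ?, ?, ?, ?, ?, ?)",
        (
            url,
            ad.get("title"),
            ad.get("price"),
            ad.get("location"),
            ad.get("owner"),
            ad.get("description"),
            json.dumps(ad.get("images", [])),
        ),
    )
    conn.commit()


# In-memory database so the sketch leaves no file behind.
conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)

sample_ad = {"title": "Civic 87", "price": "285,000", "images": []}
save_ad(conn, "https://example.com/item/1", sample_ad)
save_ad(conn, "https://example.com/item/1", sample_ad)  # re-scrape, same URL

row = conn.execute("SELECT title, price FROM car_ads").fetchone()
row_count = conn.execute("SELECT COUNT(*) FROM car_ads").fetchone()[0]
```

Keying the table on the ad URL means a periodic re-scrape overwrites the old row instead of piling up duplicates.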

Let's grab the title first. One prerequisite for writing a web scraper is being comfortable with the HTML inspector built into web browsers, because if you don't know which markup or tag to pick, you can't get the data you need. This Chrome tutorial should be helpful if you have not used inspectors before. Below is the HTML of the ad title:

As you can see, it's pretty simple: the ad's title is in an H1 tag with the CSS classes brkword and lheight28. An important point: narrow down your selection as much as you can, especially when you are picking a single entry, because a generic HTML tag may well exist more than once on the page. That is uncommon for H1, but since class names were specified I noted them as well. In this particular case there is only one H1 tag on the page, so even selecting H1 without the classes gets me the required info.
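To make the point about narrowing the selection concrete, here is a small sketch. The HTML below is made up for illustration (it is not the real OLX markup), and it deliberately contains two H1 tags to show how a generic lookup can grab the wrong one.

```python
from bs4 import BeautifulSoup

# Made-up markup with two <h1> tags; only the class names come from the article.
SAMPLE_HTML = """
<div class="header"><h1 class="site-name">Example Classifieds</h1></div>
<div class="offer">
  <h1 class="brkword lheight28">
      Honda Civic 1987 for sale
  </h1>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# A generic lookup returns the first <h1> in the document, which is not the ad title.
generic = soup.find("h1")

# Requiring both classes pins the lookup to the ad title.
specific = soup.select_one("h1.brkword.lheight28")

generic_text = generic.get_text(strip=True)
specific_text = specific.get_text(strip=True)
```

On a page with a single H1, both lookups return the same element, which is why the plain tag name is enough in this post.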

Now I am going to write the code. The first task is to request the page, check whether it is available, and then read its HTML.

    import requests
    from bs4 import BeautifulSoup

    url = 'https://www.olx.com.pk/item/civic-87gl-a-cng-cleared-IDUIaEZ.html#8dc446e009'
    headers = {
        'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'
    }

    r = requests.get(url, headers=headers)

    # make sure that the page exists
    if r.status_code == 200:
        html = r.text
        soup = BeautifulSoup(html, 'lxml')

        title = soup.find('h1')
        if title is not None:
            title_text = title.text.strip()

Here I access the page using Python's requests library. You may use others, such as urllib, but I am fond of the simplicity requests provides, so I don't look for any other library.

Along with the request I also passed a User-Agent header. It is not mandatory, but sites often refuse to serve a page if certain headers are not sent with the HTTP request. Besides that, it is good to come clean and let the server know you are trying to be nice by providing a User-Agent.
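If you want to see what the header looks like on the outgoing request without actually hitting a server, requests lets you build a request and prepare it without sending. The sketch below does that and also wraps the fetch in a small function; the URL, the User-Agent string, and the `fetch_html` name are assumptions for illustration, and the timeout is my addition rather than something the original code uses.

```python
import requests

# Hypothetical URL and User-Agent string, used only for illustration.
URL = "https://example.com/item/123"
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; price-comparison-bot/0.1)"}


def fetch_html(url, headers=HEADERS, timeout=10):
    """Return the page HTML, or None if the server does not answer 200."""
    response = requests.get(url, headers=headers, timeout=timeout)
    if response.status_code != 200:
        return None
    return response.text


# Build the request without sending it, to inspect what would go on the wire.
prepared = requests.Request("GET", URL, headers=HEADERS).prepare()
sent_user_agent = prepared.headers["User-Agent"]
```

Calling `fetch_html(URL)` performs the real request; it is left out here so that the sketch runs offline.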

After creating the BeautifulSoup object I access the H1 tag. There are multiple ways to do it; I mostly rely on the select() method since it lets you use CSS selectors. This case was simple, so I just used find(). More details are given on the BeautifulSoup documentation website.
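A quick side-by-side of the lookup methods may help here. This is only a sketch on a tiny made-up document; the thing to notice is what each method returns when nothing matches, because that decides how you should test the result.

```python
from bs4 import BeautifulSoup

# Tiny made-up document for illustration.
soup = BeautifulSoup(
    "<div><p class='intro'>Hello</p><p>World</p></div>", "html.parser"
)

first_p = soup.find("p")            # first matching tag
all_p = soup.select("div > p")      # list of every tag matching the CSS selector
intro = soup.select_one("p.intro")  # first tag matching the CSS selector

# Nothing in the document matches <h1>:
missing_find = soup.find("h1")      # find() gives None
missing_select = soup.select("h1")  # select() gives an empty list
```

So `is not None` is the right test after find() and select_one(), while a plain truthiness test (`if results:`) is the right one after select().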

You might wonder why I verify that the H1 tag exists when it is obviously right there. The reason is that you have no control over other people's websites. They may change the markup or site structure for any reason, and your code will stop working. So the best practice is to check each element's existence and proceed only if it exists. It also helps you log errors and send notifications to site administrators or a log management system so that the problem can be rectified as soon as possible.
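One way to avoid repeating the existence check for every field is a small helper that looks an element up, logs a warning when it is missing, and returns None. This is a sketch rather than the code from this post: the `extract_text` helper, the logger name, and the sample markup are all assumptions.

```python
import logging

from bs4 import BeautifulSoup

logger = logging.getLogger("scraper")  # hypothetical logger name


def extract_text(soup, selector, field_name):
    """Return the stripped text of the first match, or None if it is missing."""
    element = soup.select_one(selector)
    if element is None:
        # A missing element usually means the site changed its markup.
        logger.warning(
            "Missing %s (selector %r); the markup may have changed",
            field_name,
            selector,
        )
        return None
    return element.get_text(strip=True)


# Made-up markup that has a title but no price element.
soup = BeautifulSoup("<h1 class='brkword'> Civic 87 </h1>", "html.parser")

title_text = extract_text(soup, "h1.brkword", "title")
price_text = extract_text(soup, "strong.price", "price")
```

The warning can then be routed to email or a log management system through the usual logging handlers.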

Once the title object is found, I get its text and use strip() to remove leading and trailing whitespace. Never trust the data retrieved from a website; always clean and transform it based on your needs. Next I got the location, in much the same way as the title, and then the price.

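    location = soup.find('strong', {'class': 'c2b small'})
    if location is not None:
        location_text = location.text.strip()

    price = soup.select('div > .xxxx-large')
    # select() returns a list, never None, so test for a non-empty result
    if price:
        # strip('Rs') trims leading/trailing 'R' and 's' characters
        price_text = price[0].text.strip('Rs').strip()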

Here, as you can see, I used the select() method, which finds elements by CSS selector. I could achieve the same result with find(), but picked this for the sake of variety. select() returns a list, because multiple elements can match a selector; an empty list means nothing matched. I picked the one and only element that matched and extracted the price. Next, I picked the image URLs and the description.

    images = soup.select('#bigGallery > li > a')
    img = [image['href'].strip() for image in images]

    description = soup.select('#textContent > p')
    # select() returns a list, so check that it is non-empty
    if description:
        description_text = description[0].text.strip()

    # Creating a dictionary object
    item = {}
    item['title'] = title_text
    item['description'] = description_text
    item['location'] = location_text
    item['price'] = price_text
    item['images'] = img

Finally, I created a dictionary object. It is not part of scraping itself; I created it just to produce the data in a standard format for further processing. You may also convert it to XML, CSV, or whatever you want.
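As a rough illustration of that last step, the sketch below serializes such a dictionary to JSON and to CSV using only the standard library. The values in `item` are hypothetical stand-ins for scraped data, and joining the image URLs with `|` is just one possible convention.

```python
import csv
import io
import json

# Hypothetical sample values standing in for the scraped item.
item = {
    "title": "Civic 87",
    "description": "CNG cleared",
    "location": "Karachi",
    "price": "285,000",
    "images": ["https://example.com/1.jpg", "https://example.com/2.jpg"],
}

# JSON keeps the list of images as a list.
as_json = json.dumps(item, indent=2)

# CSV cells are flat, so the image URLs are joined into a single field.
row = dict(item, images="|".join(item["images"]))

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(row))
writer.writeheader()
writer.writerow(row)
as_csv = buffer.getvalue()
```

Note that the csv module quotes the price automatically because it contains a comma, so the column layout stays intact.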

The code is available on GitHub.
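For reference, the steps in this post can be gathered into one function that takes HTML and returns the dictionary. This is my own sketch, not the code from the repository: the `parse_ad`, `text_of`, and `parse_price` names are hypothetical, the sample markup is made up, and only the selectors are taken from the snippets in this post. It also turns the price into an integer, assuming whole-rupee amounts, and skips gallery links that have no href.

```python
import re
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def text_of(soup, selector):
    """Stripped text of the first element matching selector, or None."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


def parse_price(raw):
    """Turn a string such as 'Rs 285,000' into 285000 (whole amounts assumed)."""
    if raw is None:
        return None
    digits = re.sub(r"\D", "", raw)  # drop everything that is not a digit
    return int(digits) if digits else None


def parse_ad(html, base_url):
    """Extract the ad fields from one detail page."""
    soup = BeautifulSoup(html, "html.parser")
    # Only anchors that carry an href; relative links are resolved against base_url.
    links = soup.select("#bigGallery > li > a[href]")
    return {
        "title": text_of(soup, "h1"),
        "location": text_of(soup, "strong.c2b.small"),
        "price": parse_price(text_of(soup, "div > .xxxx-large")),
        "description": text_of(soup, "#textContent > p"),
        "images": [urljoin(base_url, a["href"].strip()) for a in links],
    }


# Made-up markup that reuses the selectors from this post.
SAMPLE = """
<h1 class="brkword lheight28"> Civic 87 </h1>
<strong class="c2b small"> Karachi </strong>
<div><strong class="xxxx-large">Rs 285,000</strong></div>
<ul id="bigGallery">
  <li><a href="/img/1.jpg">1</a></li>
  <li><a>no link</a></li>
</ul>
<div id="textContent"><p> CNG cleared. </p></div>
"""

ad = parse_ad(SAMPLE, "https://example.com/item/123")
```

Keeping the parsing separate from the HTTP request makes it easy to test against saved pages, which is handy when the site changes its markup.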

Writing scrapers is an interesting journey, but you can hit a wall if the site blocks your IP. As an individual you can't afford expensive proxies either. Scraper API provides an affordable and easy-to-use API that lets you scrape websites without any hassle. You do not need to worry about getting blocked, because Scraper API uses proxies by default to access websites. On top of that, you do not need to worry about Selenium either, since Scraper API provides a headless browser facility too. I have also written a post about how to use it.

