In this post, I am going to talk about Proto Buffers and how you can use them in Python for passing messages across networks. Protocol Buffers or Porobuf in short, are used for data serialization and deserialization. Before I discuss Protobuf, I would like to discuss data serialization and serialization first.

Data Serialization and De-serialization

According to Wikipedia

Serialization is the process of translating a data structure or object state into a format that can be stored (for example, in a file or memory data buffer) or transmitted (for example, over a computer network) and reconstructed later (possibly in a different computer environment)

In simple words, you convert simplex and complex data structures and objects into byte streams so that they could be transferred to other machines or store in some storage.

Data deserialization is the reverse process of it to restore the original format for further usage.

Data serialization and vice versa is not something Google invented. Most of the programming languages support it. Python provides pickle, PHP provides serialize function. Similarly, many other languages provide similar features. The issue is that these serialization/deserialization mechanisms are not standardized and only useful if both source and destination are using the same language. There was a need for some standard format that could be used across the system irrespective of the underlying programming language.

You might wonder, hey, why not just use XML or JSON? the issue is that these formats are in text form and they could get huge if the data structure is big and could make things slow if sent across the network. Protobuf on other hand is in binary format and could be smaller in size if a large amount of data is sent.

On another hand, Protobuf is not the first data serialization format that is available in binary format. BSON(Binary JSON) developed by MongoDB and MessagePack also supports such facilities.

Google’s Protobuf is not all about data exchange. It also provides a set of rules to define and exchange these messages. On top of it, it is heavily used in gRPC, Google’s way of Remote Procedure Calls. Hence, if you are planning to work on gRPC in any programming language you must have an idea of writing .proto files.

Installation

In order to use .proto based messages you must have a proto compiler installed on your machine which you can download from here, based on your operating system. Since I am on macOS, I simply used Homebrew to install it. Once installed correctly you can run the command protoc --version to test the version. As of now, the latest version libprotoc 3.9.1 is installed on my machine.

Now let’s write our first proto message.

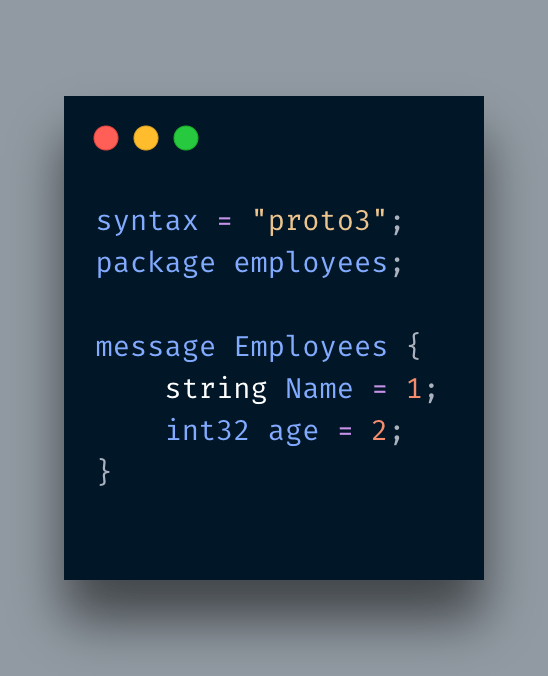

The very first line dictates which version of protobuf message should you use. If you do not specify one it’d be version 2. The second line is about packaging your message to avoid any conflict. For this particular message, you may ignore it. The package line behaves differently in different programming languages after the code generation. In Python, it does not make any difference while in Go and Java languages it is used as a package name while in C++ it is used as a namespace.

The next line starts with message followed by message type. In our case it is Employee. It then consists of fields and their types. For instance, it is string and int32 type fields. If you have worked in languages like C and Go you would find it is similar to struct.

There are two fields mention here: Name of type string and age of type int32. You can make a field mandatory by putting required before the data type name. By default all are optional. There are three types of rules:

- required:- It means this field is mandatory.

- optional:- You may leave this field.

- repeated: This field could be repeated 0 or more times.

You would have noticed that each field is being assigned a number. This is not a typical variable assignment but rather are used for tagging for identification and encoding purpose. Just to clarify this numbering is not for ordering purposes as many suggest, you can’t guarantee in which order these fields will be encoded.

Alright, the message is ready and now we have to serialize it. For that purpose, we will be using protoc a compiler that can generate code in multiple languages. Since I am working in Python so I will be using the following command:

protoc -I=. --python_out=. ./test.proto

- -I is used for the path for finding all kinds of dependencies and proto files. I mentioned a dot(.) for the current directory.

- –python_out is used for the path where the generated python file will be stored. Again, it is being generated in the current folder.

- The last unnamed argument is to pass the proto file path.

When it runs it generates a file in the format <PROTOFILE NAME>_pb2.py. In our case, it is test.proto so the generated file name is test_pb2.py.

You do not need to worry about the generated file at this moment. For now, all you have to be concerned about how to use the file. I am creating a new file, test.py that contains the following code:

import test_pb2 as Test obj = Test.Employees() obj.Name = 'Adnan' obj.age = 20 print(obj.SerializeToString())

It is pretty simple. I imported the generated file and from there I instantiate the Employees object. This Employees comes from the message type we had defined in .proto file. You then simply assign values to fields. The last line is printing the object values. When I run the program it prints:

b'\n\x05Adnan\x10\x14'

What if I set age as a string? It gives the error:

Traceback (most recent call last):

File "test.py", line 5, in <module>

obj.age = "20"

TypeError: '20' has type str, but expected one of: int, long

Awesome, so it is also validating whether the correct data is being passed or not. Now let’s save this output in a binary file.

What if we save something similar in JSON format? The code now looks like below:

import test_pb2 as Test

obj = Test.Employees()

obj.Name = 'Adnan'

obj.age = 20

print(obj.SerializeToString())

with open('output.bin','wb') as f:

f.write(obj.SerializeToString())

json_data = '{"Name": "Adnan","Age": 20}'

with open('output.json','w',encoding='utf8') as f:

f.write(json_data)

Now if I do an ls - la it results the following:

➜ LearningProtoBuffer ls -l output* -rw-r--r-- 1 AdnanAhmad staff 9 May 13 18:05 output.bin -rw-r--r-- 1 AdnanAhmad staff 27 May 13 18:05 output.json

The JSON one has a size of 27 bytes while the protobuf one took 9 bytes only. You can easily figure out who is the winner here. Imagine sending a stream of JSONified data, it’d definitely increase the latency. By sending the data in a binary format you can squeeze many bytes and increase the efficiency of your system.

Conclusion

In this post, you learned how you can efficiently send and store huge data by using Protocol Buffers which generate code in many languages thus make it easier to exchange data without worrying about the underlying programming language.