In this post I am going to discuss how you can write ETL jobs in Python by using Bonobo library. Before I get into the library itself, allow me to discuss about ETL itself and why is it needed?

What is ETL?

ETL is actually short form of Extract, Transform and Load, a process in which data is acquired, changed/processes and then finally get loaded into data warehouse/database(s).

You can extract data from data sources like Files, Website or some Database, transform the acquired data and then load the final version into database for business usage.

You may ask, Why ETL?, well, what ETL does, many of you might already been doing one way or other by writing different functions/scripts to perform tasks but one of the main advantage of ETLs is visualizing your entire data flow pipeline thus help you make decisions according to that.

OK enough talk, let’s get into writing our first ever ETL in Python.

Python Bonobo

The python library I am going to use is bonobo. It’s one of many available libraries out there. The reason to pick is that I found it relatively easy for new comers. It required Python 3.5+ and since I am already using Python 3.6 so it works well for me.

You can install it via pip by issuing the command:

pip install bonobo

Alright, the library is installed and let’s write a very very simple ETL job. I am using the example given on their Github Repo.

import bonobo

def generate_data():

yield 'foo'

yield 'bar'

yield 'baz'

def uppercase(x: str):

return x.upper()

def output(x: str):

print(x)

graph = bonobo.Graph(

generate_data,

uppercase,

output,

)

if __name__ == '__main__':

bonobo.run(graph)

It is not doing something amazing, all it is doing is converting the input data into upper case and then printing it. The visualizing part is left, for that bonobo provides a way to use graphviz library for graphs. Once installed you will execute following two commands: the first one to generate dot file and the next one to generate graph image file from dot file.

bonobo inspect --graph etl.py > etl.dot

and then

dot -o etl.png -T png etl.dot

which will result graph image like below:

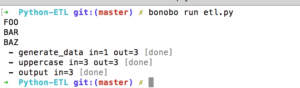

You can also see what happened with your input data by executing bonobo run. For the example above it shows like:

OK so this was a toy example let’s make an ETL of some real data. I am going to make ETL of a a couple of real estate website records. I am using Redfin and Zillow for this post. The goal is to capture price from each site and then transform them into a standardized format for later usage. In our case it’s to convert price string into float after cleaning and save it into a text file. Below is the code:

import bonobo

import requests

from bs4 import BeautifulSoup

def scrape_zillow():

price = ''

status = ''

url = 'https://www.zillow.com/homedetails/41-Norton-Ave-Dallas-PA-18612/2119501298_zpid/'

r = requests.get(url, headers=headers)

if r.status_code == 200:

html = r.text.strip()

soup = BeautifulSoup(html, 'lxml')

price_status_section = soup.select('.home-summary-row')

if len(price_status_section) > 1:

price = price_status_section[1].text.strip()

return price

def scrape_redfin():

price = ''

status = ''

url = 'https://www.redfin.com/TX/Dallas/2619-Colby-St-75204/unit-B/home/32251730'

r = requests.get(url, headers=headers)

if r.status_code == 200:

html = r.text.strip()

soup = BeautifulSoup(html, 'lxml')

price_section = soup.find('span', {'itemprop': 'price'})

if price_section:

price = price_section.text.strip()

return price

def extract():

yield scrape_zillow()

yield scrape_redfin()

def transform(price: str):

t_price = price.replace(',', '').lstrip('$')

return float(t_price)

def load(price: float):

with open('pricing.txt', 'a+', encoding='utf8') as f:

f.write((str(price) + '\n'))

if __name__ == '__main__':

headers = {

'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36',

'referrer': 'https://google.com'

}

# scrape_redfin()

graph = bonobo.Graph(

extract,

transform,

load,

)

bonobo.run(graph)

I am not touching the scraping part as I already covered it here. Beside that, as you can see that I did nothing fancy. I created two separated functions and then used yield for making them available for ETL, then, I cleaned the data and then load used for saving into the file after transformation into float.

Conclusion

In this post you learnt how you can use bonobo libraries to write ETL jobs in Python language. This was a very basic demo. Visit the official site and see goodies like these as well. Like always, code is available on Github.

(Image Credit: https://www.webopedia.com/imagesvr_ce/5182/etl-diagram.JPG)