
I have been covering web scraping on this blog for a long time, but those posts were mostly in Python; whether it was requests, Selenium, or the Scrapy framework, everything was based on Python. Scraping is not limited to a specific language, though. Any language that provides APIs or libraries for an HTTP client and an HTML parser can be used for web scraping, and Go is one of them. Go is a compiled, statically typed language, which makes it well suited to writing efficient, fast, and scalable web scrapers. Goroutines, in particular, can help you scrape hundreds of webpages concurrently.

In this introductory post, we are going to discuss the HTTP library provided by Go to access a webpage or a file. We will also discuss goquery, an HTML parsing library with a jQuery-like API. OK, enough talk, let’s write a basic scraper.

Go HTTP library

I am writing a simple scraper that uses Go’s net/http library to access my home page. I will be using http.Get to fetch the page. http.Get is a convenience function that sends the request through Go’s default HTTP client (http.DefaultClient) with default settings.

package main

import (
	"fmt"
	"io/ioutil"
	"net/http"
)

func getListing(listingURL string) {
	response, err := http.Get(listingURL)
	if err != nil {
		fmt.Println(err)
		return // response is nil on error, so stop here
	}
	defer response.Body.Close()
	if response.StatusCode == 200 {
		bodyText, err := ioutil.ReadAll(response.Body)
		if err != nil {
			fmt.Println(err)
			return
		}
		fmt.Printf("%s\n", bodyText)
	}
}

func main() {
	getListing("http://adnansiddiqi.me")
}

When you run this program, it prints the HTML of my home page. The function getListing fetches the URL passed to it using http.Get. The exported function Get returns a response object that contains details such as the HTTP status code and the response content itself. I then used ReadAll from ioutil to read the body of the response. It’s all good, but as I said, Get uses the default client settings, and you can’t override things like the User-Agent, other headers, or the timeout. The default User-Agent is Go-http-client/1.1, which is not good for sites that check the browser’s User-Agent. We need a scraper that can override the User-Agent and timeout and can also use proxies. Let’s write a real-world scraper.

Creating Yelp Scraper

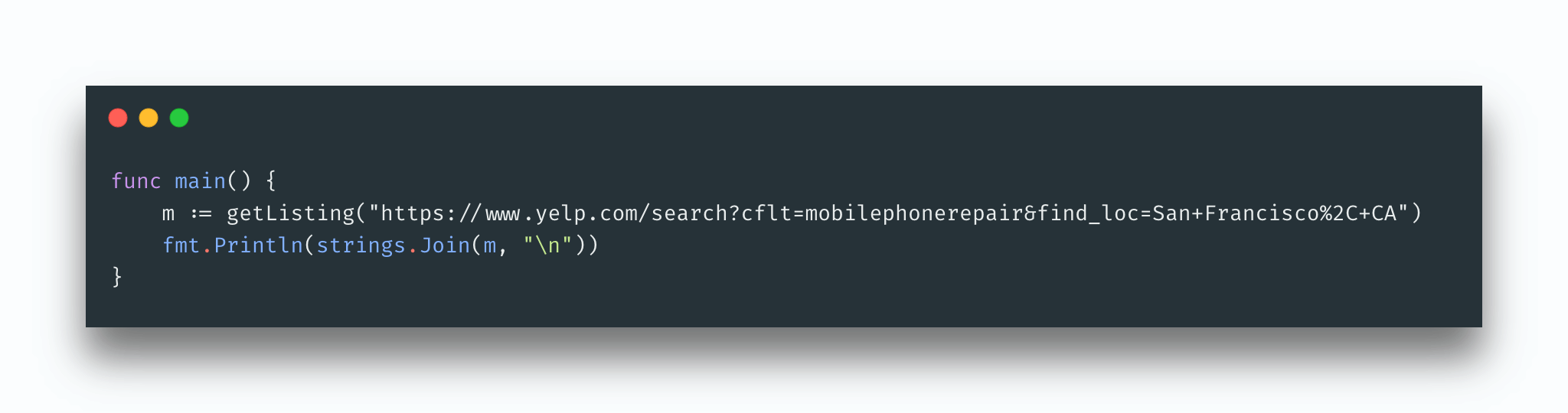

We are now going to create a Yelp scraper in Go that will scrape the URLs of the listings of mobile phone repair shops in San Francisco. We will set up a client first and create a new request. goquery will be used for HTML parsing.

// Imports needed by this version of getListing
import (
	"fmt"
	"net/http"
	"strings"
	"time"

	"github.com/PuerkitoBio/goquery"
)

func getListing(listingURL string) []string {
	var links []string
	// HTTP client with timeout
	client := &http.Client{
		Timeout: 30 * time.Second,
	}
	request, err := http.NewRequest("GET", listingURL, nil)
	if err != nil {
		fmt.Println(err)
		return links
	}
	// Setting headers
	request.Header.Set("pragma", "no-cache")
	request.Header.Set("cache-control", "no-cache")
	request.Header.Set("dnt", "1")
	request.Header.Set("upgrade-insecure-requests", "1")
	request.Header.Set("referer", "https://www.yelp.com/")
	resp, err := client.Do(request)
	if err != nil {
		// resp is nil on error (for example, a timeout), so stop here
		fmt.Println(err)
		return links
	}
	defer resp.Body.Close()
	if resp.StatusCode == 200 {
		doc, err := goquery.NewDocumentFromReader(resp.Body)
		if err != nil {
			fmt.Println(err)
			return links
		}
		doc.Find(".lemon--ul__373c0__1_cxs a").Each(func(i int, s *goquery.Selection) {
			link, _ := s.Attr("href")
			link = "https://yelp.com/" + link
			// Make sure we only fetch the correct URL with a corresponding title
			if strings.Contains(link, "biz/") {
				text := s.Text()
				if text != "" && text != "more" { // to avoid unnecessary links
					links = append(links, link)
				}
			}
		})
	}
	return links
}

First off, we set up a client variable. http.Client is a struct, and &http.Client{...} creates one and gives us a pointer to it. We set Timeout to 30 seconds, which means the request is abandoned with an error if it has not completed within 30 seconds; the zero value means no timeout at all, so without it a stalled request could hang indefinitely. Next, we create a new request by calling http.NewRequest, passing the request method, GET in this case, and the URL. The last parameter is the request body; it is nil because a GET request does not need one. Next, the required headers are set, and then the request is sent by calling the client.Do method.

Once the response is received, we check that the status code is 200 and then bring in the goquery library to parse the HTML.

doc, err := goquery.NewDocumentFromReader(resp.Body)

Next, I use the Find method, which accepts a CSS selector and returns the matching elements, if any. The fetched links are appended to a slice, which is then returned. In main, we print them.

Conclusion

So this was an introductory tutorial about writing web scrapers in Go. Go produces a compiled binary, so unlike Python you can distribute it to your customers without any hesitation. There is no need to install a Go runtime either. As always, the code is available on GitHub.

Writing scrapers is an interesting journey, but you can hit a wall if the site blocks your IP. As an individual, you can’t afford expensive proxies either. Scraper API provides an affordable and easy-to-use API that lets you scrape websites without any hassle. You do not need to worry about getting blocked because Scraper API uses proxies by default to access websites. On top of that, you do not need to worry about Selenium either, since Scraper API also provides a headless browser. I have also written a post about how to use it.
